Motion Blending

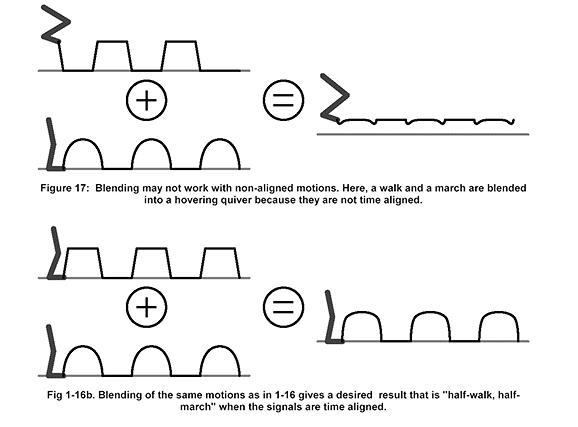

Motion Blending is the most popular choice among the large effects houses when creating crowd scenes that require more than one type of motion within the same shot, because it avoids having to capture every possible combination of moves for the Motion Library. Motion Blending can be taken to different extremes of complexity, but in its plainest form it is a very simple process, and one that seems to work better in practice than in theory. It is the process of combining motion data sets and interpolating between them in order to create a new data set. The success of the blend is highly dependent on the finishing pose of the first motion and the starting pose of the second motion. For example, blending a pose from a walking motion in which the foot is at the top of the step with one in which the foot is at the bottom of the step places the foot halfway between the two. This may be acceptable for a single pose, but not for a motion, as illustrated below.
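The plainest form of blending, frame-by-frame linear interpolation, can be sketched in a few lines of Python to reproduce the walking example. The function name `blend_frames` and the foot-height curves are hypothetical; a real blend operates on whole skeletons (root positions and joint rotations) rather than a single number per frame.

```python
import math

def blend_frames(motion_a, motion_b, weight):
    """Linearly interpolate two equal-length motions frame by frame.

    weight = 0.0 returns motion_a, weight = 1.0 returns motion_b.
    """
    if len(motion_a) != len(motion_b):
        raise ValueError("motions must have the same number of frames")
    return [(1.0 - weight) * a + weight * b for a, b in zip(motion_a, motion_b)]

# Hypothetical foot-height curves for one 24-frame walk cycle (arbitrary units).
# The foot is lifted while the sine is positive and planted (height 0) otherwise.
# Motion B is the same step, but half a cycle out of phase with motion A.
FRAMES = 24
walk_a = [max(0.0, math.sin(2 * math.pi * f / FRAMES)) for f in range(FRAMES)]
walk_b = [max(0.0, math.sin(2 * math.pi * f / FRAMES + math.pi)) for f in range(FRAMES)]

blended = blend_frames(walk_a, walk_b, 0.5)
print(round(max(walk_a), 3), round(max(blended), 3))  # prints: 1.0 0.5
```

Because one input has the foot at the top of the step whenever the other has it planted, the 50/50 blend never lifts the foot to full height and never plants it firmly: the foot hovers around the middle for the whole cycle.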

Motion Capture and Editing: Bridging Principles and Practices, M. Jung et al. (2000)

As you can see from the diagram, it is important that the two motions are time-aligned, meaning that the key events of each motion must occur at the same time. Rather than having an animator spend time adjusting the timing of one or both of the motions, which, as established in the Motion Data Editing section, is laborious, a process called Time Warping can be used to realign the key events of the motions. Time warping is often used to make animations hit set times, by effectively scaling different parts of the timeline by different amounts, but it can also be used for automatic motion blending.
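A minimal Python sketch of time warping, assuming the key events of each motion (for example foot lift-off and landing) are already known: time between consecutive key events is stretched or squashed linearly so that motion B's events land on motion A's frames, and only then are the two motions blended. The function names `warp_time`, `sample` and `time_align`, the piecewise-linear warp and the foot-height data are all assumptions for illustration; production tools may use smoother warps or find the correspondences automatically, which this sketch does not attempt.

```python
import bisect
import math

def warp_time(t, src_keys, dst_keys):
    """Map frame t on the destination timeline to the matching source time.

    src_keys and dst_keys are increasing key-event times of equal length;
    time between consecutive keys is scaled linearly (piecewise-linear warp).
    """
    i = bisect.bisect_right(dst_keys, t) - 1
    i = max(0, min(i, len(dst_keys) - 2))  # clamp to a valid key segment
    d0, d1 = dst_keys[i], dst_keys[i + 1]
    s0, s1 = src_keys[i], src_keys[i + 1]
    return s0 + (t - d0) * (s1 - s0) / (d1 - d0)

def sample(channel, t):
    """Read a per-frame channel at a fractional frame by linear interpolation."""
    t = max(0.0, min(float(t), len(channel) - 1.0))
    f = int(t)
    if f >= len(channel) - 1:
        return channel[-1]
    frac = t - f
    return (1.0 - frac) * channel[f] + frac * channel[f + 1]

def time_align(channel, src_keys, dst_keys, num_frames):
    """Resample channel so that its key events land on dst_keys."""
    return [sample(channel, warp_time(f, src_keys, dst_keys)) for f in range(num_frames)]

def blend(a, b, weight=0.5):
    """Frame-by-frame linear blend of two equal-length channels."""
    return [(1.0 - weight) * x + weight * y for x, y in zip(a, b)]

# Hypothetical foot heights: A steps between frames 4 and 12,
# B takes a slightly lower, slower step between frames 10 and 22.
N = 25
walk_a = [math.sin(math.pi * (f - 4) / 8) if 4 <= f <= 12 else 0.0 for f in range(N)]
walk_b = [0.8 * math.sin(math.pi * (f - 10) / 12) if 10 <= f <= 22 else 0.0 for f in range(N)]
A_KEYS = [0, 4, 12, 24]   # start, lift-off, landing, end of clip
B_KEYS = [0, 10, 22, 24]

blended_raw = blend(walk_a, walk_b)
blended_aligned = blend(walk_a, time_align(walk_b, B_KEYS, A_KEYS, N))
print(round(max(blended_raw), 2), round(max(blended_aligned), 2))  # prints: 0.5 0.9
```

Without warping, the two steps happen at different times and each is halved by the blend; after warping, both peaks fall on frame 8 and the blended step keeps a sensible height.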

Automatic motion blending is an approach that most of the large effects houses have already implemented in one form or another. The basic idea is explained below by Julian Mann, Head of Research at The Moving Picture Company.

"The foundation idea is to take an arbitrary amount of motion capture data consisting of many different movements and actions. EMILY (MPC's Proprietary Software) then breaks this down into tiny clips of motion - each 8-12 frames long. With thousands of tiny clips, EMILY looks at the pose of the skeleton and compares it with every other clip and then decides which it could blend with. Once it finds clips that can blend or merge together, the system automatically creates the blend and writes them out to disk. All of this information is stored in a giant graph indicating compatible clips." (Interview with Julian Mann from CGNetworks.com, 2004)
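EMILY itself is proprietary, so the following is only a hypothetical Python sketch of the general idea in the quote: cut each take into short clips, compare poses between clips, and record compatible pairs in a graph. The flat pose vectors, the Euclidean distance, the 10-frame clip length, the threshold, and the simplification of comparing only the last pose of one clip with the first pose of another are all assumptions; the blending step itself is omitted.

```python
import math

def split_into_clips(frames, clip_len=10):
    """Cut a take into consecutive, non-overlapping clips of clip_len frames.

    Trailing frames that do not fill a whole clip are dropped.
    """
    return [frames[i:i + clip_len] for i in range(0, len(frames) - clip_len + 1, clip_len)]

def pose_distance(pose_a, pose_b):
    """Euclidean distance between two poses stored as flat lists of joint values."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(pose_a, pose_b)))

def build_clip_graph(clips, threshold):
    """Adjacency list: edge i -> j when the last pose of clip i is within
    threshold of the first pose of clip j, i.e. clip j may follow clip i."""
    graph = {i: [] for i in range(len(clips))}
    for i, clip_i in enumerate(clips):
        for j, clip_j in enumerate(clips):
            if i != j and pose_distance(clip_i[-1], clip_j[0]) <= threshold:
                graph[i].append(j)
    return graph

# Two toy takes with 2-value "poses" (hypothetical data).
take_a = [[float(f), 0.0] for f in range(30)]   # becomes clips 0, 1, 2
take_b = [[9.5, 0.5 * f] for f in range(10)]    # becomes clip 3
clips = split_into_clips(take_a) + split_into_clips(take_b)
graph = build_clip_graph(clips, threshold=1.0)
print(graph)  # prints: {0: [1, 3], 1: [2], 2: [], 3: []}
```

Consecutive clips from the same take connect trivially, while the edge 0 -> 3 is a new connection between two different takes, which is where the extra variety comes from.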

The graph system mentioned by Julian Mann was an idea presented at SIGGRAPH 2002 by Lucas Kovar, Michael Gleicher and Frédéric Pighin, called Motion Graphs. The idea is to automatically generate transitions that connect motions that were not designed to be used with each other, thereby dramatically increasing the content of the Motion Library. Using descriptive labels assigned at the time of capture, such as "walking" or "throwing", and some constraints (e.g. left heel planted), similar frames between each pair of motions can be identified. Similarity takes into account joint velocities and accelerations in addition to body posture. These similar frames become the nodes of the graph; nodes are effectively choice points at which a different motion can be selected. Transitions between similar frames in different motions are generated automatically by linear interpolation of the root position and spherical linear interpolation of the joint rotations. After the graph has been made more efficient by pruning dead-end nodes, the motion library can be connected to the crowd system, which reads in movements for the characters. If a character needs to make a left turn, the motion library automatically loads a clip that makes a left turn. The more data in the library, the larger the selection of clips that make a left turn.
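Generating a single transition can be sketched in Python as follows: over a short window, the root position is interpolated linearly and each joint is interpolated with slerp, which operates on rotations, so joints are represented here as unit quaternions (w, x, y, z). The smoothstep `ease` weight, the (root, joints) frame layout, the function names, and the assumption that both windows are already aligned to a common ground-plane frame are choices made for this sketch, not details taken from the Motion Graphs paper.

```python
import math

def lerp(a, b, t):
    """Linear interpolation between two equal-length vectors."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # q and -q are the same rotation; flip to take the shorter arc
        q1 = [-c for c in q1]
        dot = -dot
    if dot > 0.9995:  # nearly identical: normalized lerp avoids dividing by ~0
        q = lerp(q0, q1, t)
        norm = math.sqrt(sum(c * c for c in q))
        return [c / norm for c in q]
    theta = math.acos(dot)
    w0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(q0, q1)]

def ease(p, k):
    """Smoothstep weight for frame p of a k-frame window, rising from near 0 to near 1."""
    u = (p + 1) / (k + 1)
    return 3.0 * u ** 2 - 2.0 * u ** 3

def make_transition(window_a, window_b):
    """Blend the outgoing window of motion A into the incoming window of motion B.

    Each frame is (root_position, [joint quaternions]); both windows are
    assumed to be the same length and already aligned to a common frame.
    """
    k = min(len(window_a), len(window_b))
    frames = []
    for p in range(k):
        w = ease(p, k)
        root_a, joints_a = window_a[p]
        root_b, joints_b = window_b[p]
        root = lerp(root_a, root_b, w)  # root position: linear interpolation
        joints = [slerp(qa, qb, w) for qa, qb in zip(joints_a, joints_b)]  # joints: slerp
        frames.append((root, joints))
    return frames

# Hypothetical 4-frame windows with one joint: A is unrotated, B is turned 90 degrees about z.
IDENTITY = [1.0, 0.0, 0.0, 0.0]
TURN_90_Z = [math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4)]
window_a = [([0.0, 0.0, 0.0], [IDENTITY]) for _ in range(4)]
window_b = [([1.0, 0.0, 0.0], [TURN_90_Z]) for _ in range(4)]
transition = make_transition(window_a, window_b)
```

Slerp is used for the joints because linearly averaging rotations does not keep them unit length or turn at a constant rate, whereas the root is a plain position and can be averaged directly.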

So why is anything else needed?

Firstly, capturing all the data can be an expensive and time-consuming exercise. All the motions have to be meticulously planned beforehand, since the session may be the only chance to capture them, and forgetting to capture a certain action could prove costly. However well you plan, there is nearly always something that you would redo if you had the chance. Scenarios such as climbing ladders and interacting with props are very difficult to capture, and it is not feasible to anticipate every combination that might be needed. The mocap performer may not have the same size or proportions as the character being animated, which creates a retargeting problem. The performer can also only do what is humanly possible, which is inconvenient when the characters are not human and can break the restrictions of reality by jumping miles into the air or sticking to walls, like the characters in I, Robot, which were created by Digital Domain using the Massive crowd system. The most important limitation of this technique, however, is that the range of available motions is dictated solely by the number of clips in the motion library, so other techniques need to be investigated.